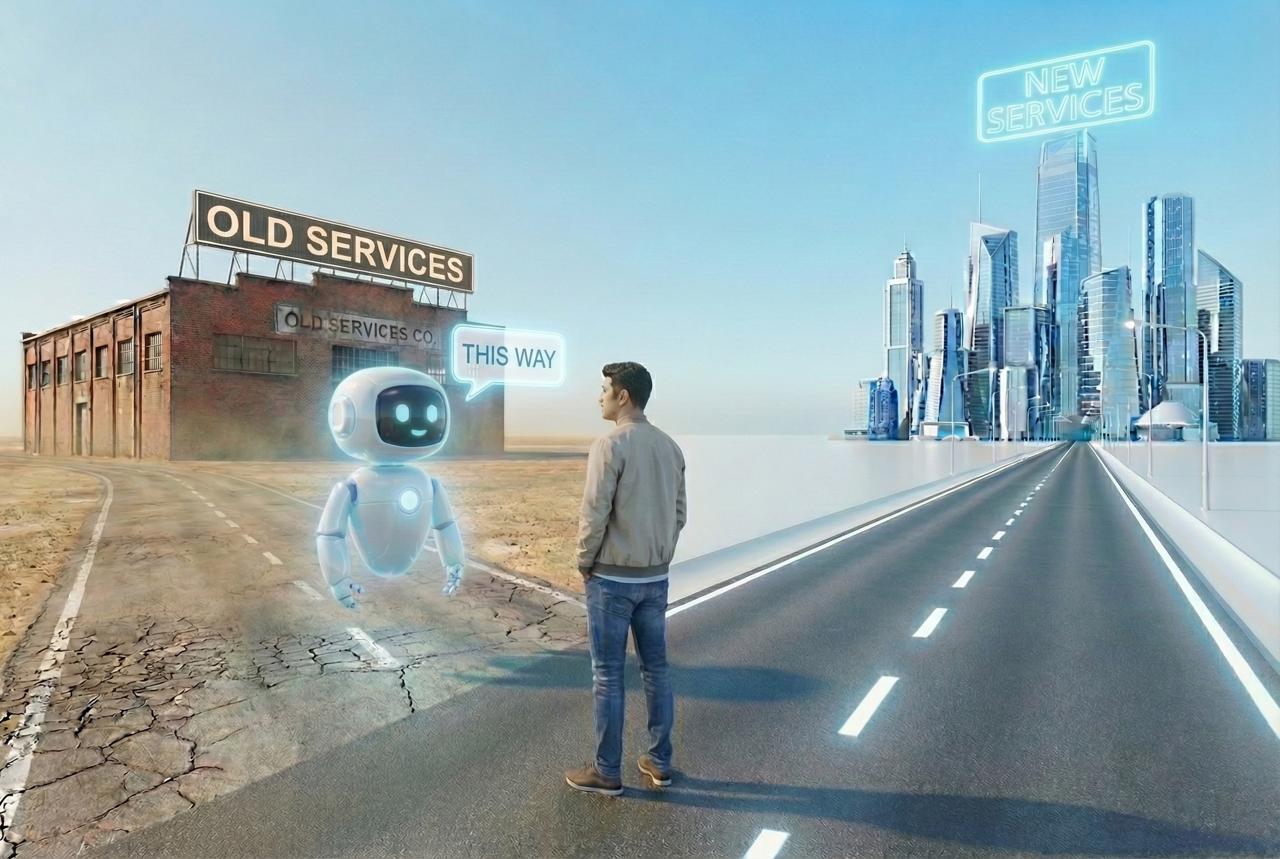

AI platforms continue recommending companies for services they discontinued months or years ago because training data lags behind real-world changes. You made the strategic decision to pivot, updated your website, revised your messaging, and trained your team on the new positioning.

The pivot is complete on your end, but AI platforms didn't get the memo.

ChatGPT, Claude, Gemini, and Perplexity now influence how prospects discover and evaluate companies. Consulting firm McKinsey found that 44% of AI-powered search users say it's their primary and preferred source, topping traditional search engines (31%), brand websites (9%), and review sites (6%). When someone asks an AI platform for a recommendation in your category, that platform draws from training data that may predate your pivot by months or even years.

The result: prospects contact you asking for services you no longer provide. You spend time on discovery calls explaining what you actually do now. Your sales team rejects or redirects leads that should never have reached you.

This isn't a temporary glitch; it's a structural feature of how large language models acquire, retain, and update information about companies.

We're experiencing this at our own agency. RankScience started as an A/B testing platform (Y Combinator W17) and evolved over the years into a pure SEO and AI search agency specializing in generative engine optimization. We completely updated our website. There isn't a single mention of A/B testing or PPC services anywhere on our current site.

Despite this, AI platforms continue recommending us for services we discontinued:

RankScience discontinued A/B testing software and PPC services in 2024. We are now a pure SEO and AI search optimization agency.

The disconnect creates real business problems. Prospects reach out asking about services we don't offer. We spend time on emails explaining our actual focus. Some leads we simply have to turn away. This wastes sales capacity that should be spent on prospects who need what we actually provide.

One of our current clients just completed a rebrand with a new company name and domain. After the switch, we conducted a comprehensive AI visibility audit of their old and new brands. The results confirmed what we suspected: AI platforms were still referencing their old company name and previous service offerings. They're now in the early stages of the same lag we've been experiencing, watching AI platforms confidently recommend them for their former positioning while their new brand identity sits invisible to these systems.

The pattern is consistent: the more successful your old positioning was in generating online presence and authority, the more persistent it becomes in AI training data.

Your old positioning persists in AI recommendations because platforms train on massive datasets compiled months before your pivot or name change. AI platforms don't learn about your company in real time.

Source: Platform documentation and publicly stated knowledge cutoffs. Cutoffs and browsing behavior vary by product mode and settings.

A comprehensive study published in Nature Scientific Reports found that time-based model degradation occurred in 91% of cases across 32 real-world machine learning (ML) datasets. While this research examined deployed machine learning models broadly rather than LLMs specifically, the freshness problem is analogous: AI systems of all types struggle when their training data ages. This degradation isn't gradual and predictable. Some models show "explosive aging patterns" where performance remains stable for extended periods before abruptly declining. Your company information may appear current in AI responses for months, then suddenly become dramatically outdated as the model crosses a performance threshold.

Research on clinical accuracy found that training data freshness determines reasoning accuracy across multiple AI model families. The finding was striking: how recent the training data is, rather than model size or architecture, is the dominant factor determining reasoning accuracy in large language models. Models trained before June 2023 showed accuracy as low as 76%, while those trained after showed 95-98% accuracy on the same questions.

The implication is direct: If outdated information about your positioning exists in training data, AI models will confidently present that outdated information regardless of how sophisticated the underlying architecture becomes. No amount of technical advancement overcomes stale training data.

Web search capabilities in AI platforms provide only partial and inconsistent relief from training data persistence. You might assume these systems would simply look up your current information, but the reality is more complicated.

Retrieval-Augmented Generation (RAG) is a technique that connects AI models to external, up-to-date knowledge sources by retrieving relevant information before generating a response. Amazon Web Services (AWS) documentation explains that RAG pulls from new data sources before generating responses. This sounds like a solution.

However, research demonstrates a critical limitation: knowledge conflicts between retrieved data and training memory. When fresh information from external sources contradicts the AI's older internal knowledge from training, models may ignore the retrieved context and default to their training data memory. Think of it like muscle memory versus reading a cue card: the AI can read the new information (web search), but under pressure it defaults to what it practiced thousands of times during training. This is particularly problematic for entity information where the model has strong prior associations, which is exactly the situation companies face after rebranding or pivoting.

Even with perfect RAG implementation, an AI might continue presenting your old company positioning because its training data associations are stronger than retrieved updates. The model has seen thousands of references to your old positioning during training. A few new web pages saying something different may not override that accumulated weight.

Platform behavior varies significantly:

This variability means your current positioning may appear on one platform while outdated information persists on another, creating an inconsistent experience for prospects researching your company across multiple AI tools.

The evidence points to specific timeline expectations for how long outdated information persists after a pivot or rebrand.

Platforms that aggressively use real-time web search may surface your new positioning relatively quickly. Perplexity's architectural approach of defaulting to live search makes it the fastest to reflect changes. If your updated website is well-indexed and authoritative, these systems can begin showing current information within a few months.

However, this requires your new positioning to be clearly established across multiple authoritative sources. A single updated About Us page on your company website may not override months of training data pointing in a different direction.

Major platforms like ChatGPT and Claude undergo periodic model updates that incorporate new training data. The lag between events occurring and appearing in training data has historically ranged from 4-12 months depending on the platform and update cycle.

Research also shows that effective knowledge cutoffs can differ from stated cutoffs by 3-6 months due to how data is collected and processed. A model claiming an August 2025 cutoff may functionally have gaps for information from May or June 2025, particularly for less prominent entities or recently changed information.

During this period, your old and new positioning coexist across different AI platforms. One system confidently recommends you for discontinued services while another correctly describes your current focus. Prospects get different answers depending on which AI tool they use and how they use it.

Companies with strong historical presence in AI training data face the longest correction timelines. The more successful your old positioning was, the more references to it exist across the web sources AI models train on. Wikipedia entries, press coverage, forum discussions, and third-party mentions all reinforce associations that your updated website alone cannot override.

Research on knowledge graph entity disambiguation shows that ambiguous entities require consistent signals across contexts to be correctly identified. If your old positioning appears in dozens of authoritative sources while your new positioning exists primarily on your own website, AI systems may continue prioritizing the more widely corroborated (but outdated) information.

For companies with extensive historical coverage, full propagation across all AI ecosystems may take 18-24 months or longer.

The practical impact of AI training data lag manifests in your sales pipeline.

Mismatched leads waste sales capacity. Prospects contact you based on AI recommendations for services you no longer provide. Your team spends time on discovery calls only to explain that you've pivoted. Some conversations simply end when prospects realize you don't offer what they need. This represents real cost: time your salespeople could spend on qualified prospects, and potential damage to your reputation when prospects feel misled (even though the AI platform, not you, provided incorrect information).

Competitive positioning erodes during the lag. While AI platforms associate you with legacy services, competitors may be capturing the category language for your current offerings. If you pivoted from general marketing consulting to specialized revenue operations but ChatGPT still describes you as a marketing agency, prospects searching for RevOps help get directed elsewhere. First-mover advantage in your new category erodes while you wait for AI systems to catch up.

Confusion compounds across touchpoints. A prospect might see your current positioning on your website, then receive conflicting information from ChatGPT, then see something different again on Gemini. This inconsistency creates doubt about what you actually do. In B2B sales, confusion delays decisions and gives competitors an advantage.

Research from IBM, the technology and consulting company, found that AI now acts as a gatekeeper in customer discovery. For companies with outdated positioning in AI training data, potential customers may never be exposed to current offerings, creating a systemic lead quality problem that marketing alone cannot solve.

The 6-18 month timeline is largely outside your control. What you can control is how consistently you signal your current positioning across the sources AI platforms reference.

RankScience LLC

2443 Fillmore St #380-1937,

San Francisco, CA 94115

© 2026 RankScience, All Rights Reserved