The Mention-Source Divide: AI Uses Your Content But Names Someone Else

You did everything right: published the definitive guide, added original data, and even earned citations in AI answers.

Then a prospect asks, "What tool should I use?" and the AI recommends your competitor, using your research to justify the choice.

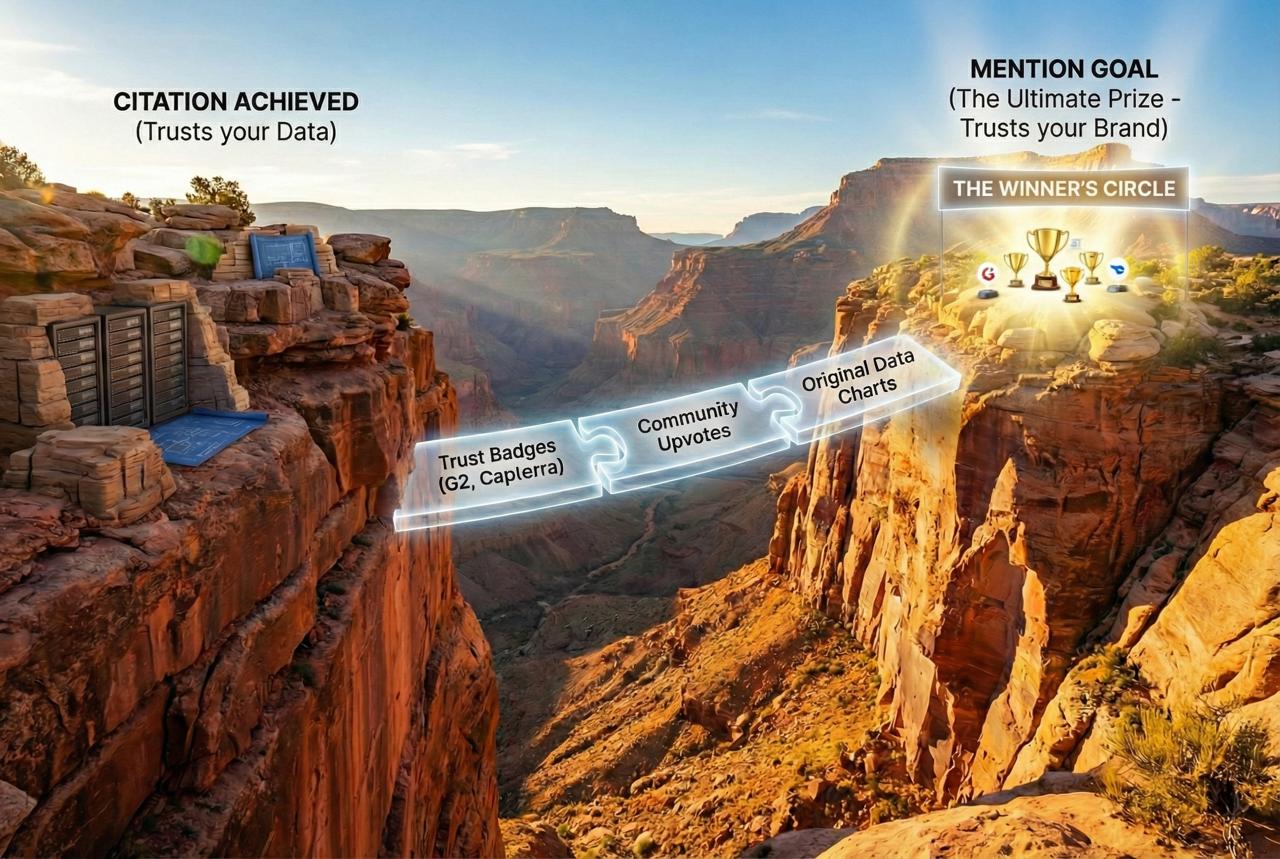

SEMrush, the enterprise SEO platform, calls this the "Mention-Source Divide." It stems from the difference between two signals AI platforms can give your brand:

- Citation: When an AI platform links to your content as a source, essentially footnoting your research in its response. Being cited means AI systems trust your data.

- Mention: When an AI platform names your brand directly in the answer as a recommendation. Being mentioned means AI systems trust your brand enough to recommend you.

If you're only cited, you're informing the market while competitors get the buyer's attention.

Here's how the two signals differ:

| | Citation | Mention |
|---|---|---|
| What it is | AI links to your content as a source | AI names your brand in the answer |
| What it signals | Your data is trusted | Your brand is trusted |
| Where it appears | Footnotes/source list | Body of the response |
| Pipeline impact | May send some traffic | Puts you on the shortlist |
| What it takes | Useful, accurate content | Consistent brand signals across trusted platforms |

Most businesses have earned only the first signal: the citation.

Quick Test: Are You Being Cited But Not Recommended?

Ask AI platforms directly about your category:

- "What are the best [your category] tools?"

- "[Your brand] vs [competitor]"

- "Who should I use for [problem you solve]?"

If your domain appears in citations but your brand doesn't appear in the answer, you have a Mention-Source Divide problem. If you're neither cited nor mentioned, it's a content discoverability problem first.
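If you run the quick test across many prompts, it helps to score each answer the same way every time. The Python sketch below is a minimal, hypothetical classifier. It assumes you have already captured the answer text and the list of cited URLs for each prompt, and that a case-insensitive substring match is good enough to detect your brand name. `classify_answer`, its labels, and the `ExampleBrand`/`example.com` values are illustrative names, not part of any tool's API.

```python
from urllib.parse import urlparse


def host_matches(url: str, domain: str) -> bool:
    """True if the URL's host is the domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    domain = domain.lower()
    return host == domain or host.endswith("." + domain)


def classify_answer(answer_text: str, cited_urls: list[str], brand: str, domain: str) -> str:
    """Apply the quick test's two checks to one AI answer (hypothetical helper)."""
    mentioned = brand.lower() in answer_text.lower()  # naive substring match
    cited = any(host_matches(url, domain) for url in cited_urls)

    if cited and mentioned:
        return "cited and mentioned"
    if cited:
        return "mention-source divide"  # domain cited, brand absent from the answer
    if mentioned:
        return "mentioned only"
    return "discoverability problem"  # neither cited nor mentioned


answer = "For remote teams, consider Asana, Monday.com, or ClickUp."
sources = ["https://www.example.com/pm-comparison-guide"]
print(classify_answer(answer, sources, brand="ExampleBrand", domain="example.com"))
# → mention-source divide
```

Substring matching will over-count short brand names (a brand called "Box" would match "inbox"), so a real workflow might match on word boundaries or a list of brand aliases instead.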

You publish a guide, AI cites it, but when the answer turns into a shortlist, your brand disappears.

When Your Content Gets Credit But Your Brand Doesn't

Being cited without being mentioned is far more common than earning both. AirOps, an AI workflow automation company, analyzed citation and mention patterns in AI-generated answers and found that brands are 3x more likely to be cited alone than to earn both signals. Being a source is common; being recommended is rare.

Here's a concrete example: A prospect asks ChatGPT, "What's the best project management software for remote teams?" The AI names Asana, Monday.com, and ClickUp in its answer. At the bottom, it cites your comprehensive comparison guide as a source. Your content did the work. Your competitors got the recommendation.

Consider the mechanics: when someone asks an AI platform for software recommendations, the platform synthesizes information from multiple sources. Your detailed comparison guide might provide the framework for the answer. Your pricing research might inform the cost discussion. But when the AI names specific tools to consider, it draws from a different evaluation process where your brand may not qualify.

Key Insight:

Citations and mentions serve different pipeline functions. Citations may send some traffic through source links, but mentions put your brand name directly in front of potential customers at the exact moment they're evaluating options.

Understanding the gap is step one. Step two is understanding why it exists.

How AI Decides Who Gets Cited vs Who Gets Recommended

AI platforms evaluate content through two separate lenses:

- Evidence check (earns citations): Is this information accurate and useful enough to reference?

- Recommendation check (earns mentions): Does this brand show up consistently in trusted places as a real solution?

Most content marketing strategies train only the first check. Brands create comprehensive guides, publish original research, and build topical authority, all activities that earn citations. But citation-worthy content doesn't automatically translate into recommendation-worthy brands.

Superlines, an AI visibility platform, frames the distinction clearly: citations prove authority and send traffic, but mentions shape perception, trust, and shortlists before users decide where to click or which product to choose. When someone asks an AI platform for recommendations, being mentioned is what puts you on the shortlist.

Why Brand Mentions Now Outweigh Backlinks for AI Visibility

Traditional SEO trained marketers to prioritize backlinks as the primary authority signal, and backlinks still matter for Google rankings. But AI visibility works differently. Brand mentions, even unlinked ones, predict AI recommendations better than backlink profiles do: according to Ahrefs data, brand mentions correlate about 3x more strongly with AI visibility than backlinks.

Mentionlytics, a brand monitoring platform, explains the mechanism: AI models predict answers based on patterns and recurring brand references rather than searching the web like Google. When your brand appears consistently in discussions, reviews, and industry coverage, AI systems learn to associate your brand with relevant topics regardless of whether those mentions include links.

Key Insight:

The shift from building links to earning mentions represents a fundamental change in off-page optimization strategy. Content partnerships, industry coverage, community presence, and review platforms now matter more than link acquisition for AI visibility.

What Separates Brands That Get Mentioned From Those That Just Get Cited

The combination of citations and mentions creates compounding visibility that citation-only brands never achieve. AirOps found that brands achieving both signals are 40% more likely to resurface in consecutive AI responses, and that the combination creates consistency while single-signal presence is unpredictable.

Now that you understand why the gap exists and why mentions matter, here's how to close it.

Three Paths to Close the Gap Between Citation and Mention

Mentionlytics identifies the three paths that earn mentions:

Build presence on trusted third-party platforms.

AI systems learn to mention brands that appear consistently on platforms they already trust. One strong mention on a trusted platform beats ten forgettable ones elsewhere.

- First move: Identify 3-5 sources that appear in AI citations for your category (G2, Capterra, relevant subreddits, industry publications), then prioritize getting your brand mentioned with category context on those specific platforms.

- Success signal: Your brand name appears in the body text of trusted sources (not just author bios or ads), associated with the problem you solve.

Publish original research and case studies.

Brands that regularly publish proprietary data become more mention-worthy because AI systems can cite specific findings and associate them with the brand behind them.

- First move: Publish one proprietary metric or benchmark quarterly, ideally with a branded name that AI can reference (like "HubSpot's State of Marketing" or "Salesforce's State of Sales").

- Success signal: AI answers paraphrase your data and attach your brand name to it.

Engage authentically in community discussions.

Reddit, Quora, and industry forums influence AI mentions because people discuss problems and solutions openly there. AI takes note when brands show up organically rather than through promotional content.

- First move: Take a "helpful account" approach: answer questions, share insights, and solve problems without pitching. Focus on threads where your category is being discussed.

- Success signal: Other users mention your brand unprompted in replies, or your answers get upvoted and referenced.

SE Ranking, an enterprise SEO platform, adds a practical starting point: identify where AI already looks for information and secure placements there.

How to Track Your AI Visibility Gap

Beyond the quick test, AI visibility tools like SEMrush's AI Visibility Overview show queries where your domain appears in AI-generated responses. Look for patterns where your content is being used as a source but your brand isn't being recommended.

What to track:

- Mention rate: Percentage of target prompts where your brand is named in the answer

- Citation rate: Percentage where your domain is cited as a source

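- Citation-without-mention: Percentage where your domain is cited but your brand isn't named (the classic Mention-Source Divide pattern)

- Mention-without-citation: Percentage where your brand is named but your domain isn't cited, which indicates strong off-site brand signals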
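The four metrics are simple percentages over the prompts you track. Here is a minimal Python sketch, assuming each prompt-and-platform check has already been recorded as two yes/no facts: whether the brand was named and whether the domain was cited. The `PromptResult` record, the `visibility_rates` function, and the sample data are hypothetical, and every prompt-platform pair counts as one observation.

```python
from dataclasses import dataclass


@dataclass
class PromptResult:
    """One tracked prompt checked on one AI platform (hypothetical record)."""
    prompt: str
    platform: str
    mentioned: bool  # brand named in the body of the answer
    cited: bool      # domain listed among the sources


def visibility_rates(results: list[PromptResult]) -> dict[str, float]:
    """Return each tracked signal as a percentage of all prompt results."""
    if not results:
        raise ValueError("no prompt results to score")
    total = len(results)

    def pct(count: int) -> float:
        return round(100 * count / total, 1)

    return {
        "mention_rate": pct(sum(r.mentioned for r in results)),
        "citation_rate": pct(sum(r.cited for r in results)),
        "citation_without_mention": pct(sum(r.cited and not r.mentioned for r in results)),
        "mention_without_citation": pct(sum(r.mentioned and not r.cited for r in results)),
    }


results = [
    PromptResult("best project management tools", "ChatGPT", mentioned=False, cited=True),
    PromptResult("best project management tools", "Perplexity", mentioned=True, cited=True),
    PromptResult("ExampleBrand vs Asana", "ChatGPT", mentioned=True, cited=False),
    PromptResult("who should I use for remote teams", "Perplexity", mentioned=False, cited=False),
]
print(visibility_rates(results))
# → {'mention_rate': 50.0, 'citation_rate': 50.0, 'citation_without_mention': 25.0, 'mention_without_citation': 25.0}
```

Filtering `results` by `platform` before calling the function gives per-platform rates, which matters because success on one AI platform doesn't predict success on another.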

What good looks like: As you close the gap, you'll see your brand mentioned more consistently across category prompts, with presence across at least 2 major platforms.

How often to check: Monthly for competitive categories, quarterly for stable industries. Track 15-20 prompts per product category across ChatGPT and Perplexity at minimum.

Why You Need to Test Across Multiple Platforms

Each AI platform sees a different version of the web. Joshua Blyskal, an AI visibility researcher, analyzed 100,000 prompts across ChatGPT and Perplexity and found only 11% domain overlap between the platforms. The same questions, asked on different platforms, draw from almost entirely different source pools.
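The 11% figure describes how few cited domains two platforms share. The study's exact formula isn't given here, so the sketch below assumes a Jaccard-style overlap (shared domains divided by all distinct domains cited by either platform), computed from source URLs you collect yourself for one prompt set. The function names and sample URLs are hypothetical.

```python
from urllib.parse import urlparse


def cited_domains(urls: list[str]) -> set[str]:
    """Reduce cited URLs to lowercase hostnames without a leading 'www.'."""
    domains = set()
    for url in urls:
        host = (urlparse(url).hostname or "").lower()
        if host.startswith("www."):
            host = host[len("www."):]
        if host:
            domains.add(host)
    return domains


def domain_overlap(urls_a: list[str], urls_b: list[str]) -> float:
    """Jaccard overlap: shared domains divided by all distinct domains."""
    a, b = cited_domains(urls_a), cited_domains(urls_b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


chatgpt_sources = [
    "https://en.wikipedia.org/wiki/Project_management",
    "https://www.example.com/pm-comparison-guide",
]
perplexity_sources = [
    "https://www.reddit.com/r/projectmanagement/",
    "https://example.com/pricing",
]
print(round(domain_overlap(chatgpt_sources, perplexity_sources), 2))  # → 0.33
```

Hostnames are compared as-is after stripping `www.`, so `en.wikipedia.org` and `wikipedia.org` count as different domains; normalizing to registrable domains with the Public Suffix List would be more robust.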

Search Engine Roundtable, an SEO news site, reported on a study from Profound, an AI search analytics company, documenting platform-specific source preferences:

| Platform | Preferred sources | Tendency |
|---|---|---|
| ChatGPT | Wikipedia (47.9%), Bing index | Favors established databases |
| Perplexity | Reddit (46.7%), real-time news | Favors user-generated content |
| Google AI Overviews | Diversified across Google's index | Broader source mix |

What this means in practice: optimizing for ChatGPT requires different tactics than optimizing for Perplexity, and success on one platform doesn't predict success on another.

Key Insight:

Not being cited and not being mentioned are two distinct problems requiring different solutions. If you're not being cited, you have a content authority problem. If you're cited but not mentioned, you have a brand positioning problem.

AirOps documented that only 30% of brands stay visible across consecutive answers. Inconsistent appearance, even when occasionally mentioned, indicates weak brand signals that need strengthening.
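To check consistency yourself, rerun the same prompt several times on one platform and record which brands each answer names. The sketch below assumes brand extraction has already happened and measures a hypothetical "resurfacing rate": of the times a brand appears in one run, how often it appears again in the next. This is an illustrative metric, not AirOps' published methodology, and the brand sets are made up.

```python
from typing import Optional


def resurfacing_rate(runs: list[set[str]], brand: str) -> Optional[float]:
    """Share of a brand's appearances that carry over to the next run.

    `runs` holds the brands named in each answer, in the order the same
    prompt was rerun. Returns None if the brand never appears before the
    final run, since there is nothing to carry over.
    """
    carried = 0
    opportunities = 0
    for earlier, later in zip(runs, runs[1:]):
        if brand in earlier:
            opportunities += 1
            if brand in later:
                carried += 1
    return carried / opportunities if opportunities else None


# Hypothetical brand sets from four reruns of one prompt.
runs = [
    {"Asana", "ClickUp", "ExampleBrand"},
    {"Asana", "Monday.com"},
    {"Asana", "ClickUp", "ExampleBrand"},
    {"Asana", "ExampleBrand"},
]
print(resurfacing_rate(runs, "Asana"))         # → 1.0
print(resurfacing_rate(runs, "ExampleBrand"))  # → 0.5
```

A brand with a high rate shows up reliably rather than occasionally; a low rate is the kind of inconsistent appearance that points to weak brand signals.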

The bottom line:

Your content is already doing the hard work of informing AI responses. The question is whether your brand gets credit for it. The brands closing this gap are the ones shifting resources from content production alone to brand positioning across the platforms AI systems trust.

See Where You Stand

The brands closing this gap now are the ones prospects see when they ask AI for recommendations. The brands ignoring it are becoming invisible training data for competitors.

RankScience's AI visibility audits show how ChatGPT, Gemini, Perplexity, and Google AI Overviews currently describe your company, and where competitors are winning.

Frequently Asked Questions

What is the difference between an AI citation and an AI mention?

Citations are footnotes: AI links to your content as supporting evidence for its answer. Mentions are endorsements: AI names your brand directly in the response when users are choosing what to buy. Only 28% of brands achieve both in AI responses.

Why does AI cite my content but recommend competitors?

AI platforms evaluate content usefulness separately from brand recommendation worthiness. Your content may provide excellent reference material while your brand lacks sufficient signals: consistent mentions across trusted platforms, original research tied to your name, or community presence. Brands facing this gap need brand positioning beyond content quality.

Do brand mentions matter more than backlinks for AI visibility?

According to Ahrefs data, brand mentions correlate about 3x more strongly with AI visibility than backlinks do. AI systems train on raw text rather than hyperlink graphs, so unlinked mentions in reviews, industry coverage, and community discussions influence AI recommendations more than link building. This represents a fundamental shift in off-page optimization for AI search.

Do different AI platforms cite different sources?

Yes. Analysis of 100,000 prompts showed only 11% domain overlap between ChatGPT and Perplexity citations. Each platform indexes the web differently: ChatGPT uses Bing's search index, Perplexity uses vector indexing, and Google AI Overviews draws from its own index. Brands need platform-specific strategies since success on one doesn't guarantee visibility elsewhere.

How can I track whether AI mentions my brand or only cites my content?

Use an AI visibility tool like SEMrush, which shows where your content appears in AI-generated responses and whether your brand is being mentioned or just cited. Supplement with manual testing by asking AI platforms category questions and tracking whether your brand appears in answers versus only in citations. Compare results across multiple platforms.