Programmatic SEO sites are failing at unprecedented rates. It's not just spam sites; major publishers and market leaders like G2, a software review platform, have lost around 80% of their traffic. Even established brands with years of programmatic success are watching rankings disappear.

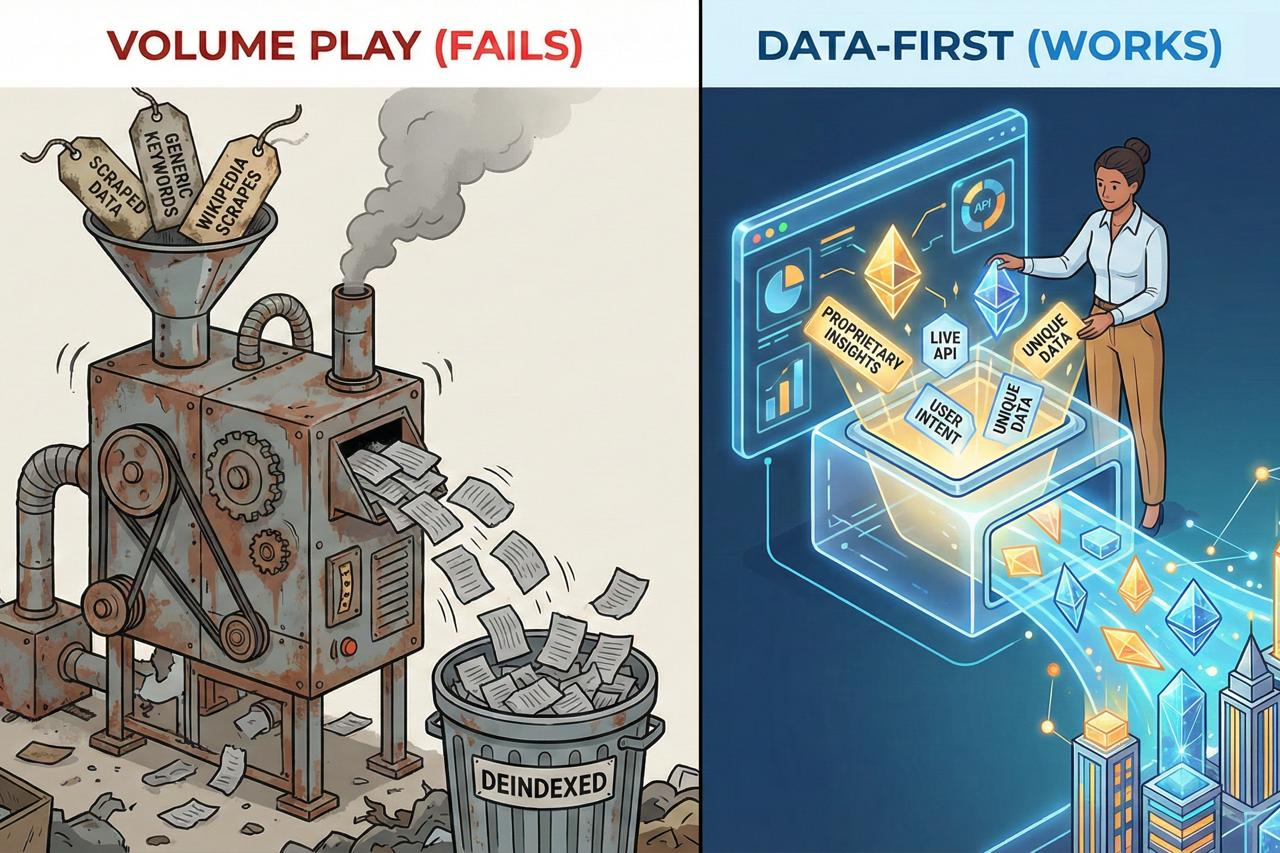

Programmatic SEO as a volume strategy is broken, but programmatic SEO itself isn't dead. It still works when it is built on proprietary data and designed for users, not search engines.

The difference between failure and success comes down to one question: Do you have unique data that Google wouldn't count as thin content?

Google's scaled content abuse penalties target a lack of user value, not the method used to create pages.

The Science Behind the Penalty

Peer-reviewed research suggests that "value" is measurable, not subjective. Generative Engine Optimization (GEO) research from Princeton University and IIT Delhi found that content visibility in AI-generated answers improves significantly when the content includes citations, quotations, and unique statistics. Generic templates fail because they lack these trust signals. Similarly, Stanford University research shows that human evaluation remains the gold standard for nuanced tasks: AI can handle volume, but differentiation still requires human strategy.

Google's Official Stance

Google's March 2024 scaled content abuse policy states that producing content at scale primarily to boost search ranking is abusive, "whether automation, humans or a combination are involved." The policy explicitly targets outcome rather than method. John Mueller, Google Senior Search Analyst, called programmatic SEO a fancy banner for spam when the goal is manipulation rather than value.

Danny Sullivan, Google Search Liaison, reinforced this: Google doesn't care whether content is AI, automated, or human-created; "it's going to be an issue if it's low-value at scale."

Implication: Sites fail not because their pages are programmatic, but because they lack the specific trust signals (statistics, quotations, original data) that distinguish valuable content from generic templates.

Three specific failure patterns explain why most programmatic SEO implementations fail: thin content, template homogeneity, and keyword-first (not user-first) strategy.

Intent mismatch between page content and user needs guarantees failure regardless of template quality. G2's programmatic pages answered "What is the pricing?" while users wanted to know "Is this software actually good?" No amount of template optimization could fix that mismatch. G2 lost around 80% of its traffic, and Reddit, a social news and discussion platform, now ranks for 95% of the searches G2 previously owned.

Reddit wins these software review queries because it provides authentic user experiences rather than aggregated specifications. Google AI Overviews and other search features tend to exclude G2 because its pages lack substantive editorial insight into the questions users actually ask.

Beyond traditional template failures, a new AI-driven variation of this pattern has emerged.

Some companies have shifted from optimizing for Google rankings to generating content designed primarily to be cited by AI platforms such as large language models (LLMs). This "AI-model-first" approach prioritizes perceived LLM citation triggers over solving real user problems.

The fundamental flaw: Google's systems still control organic visibility. AI platforms predominantly cite content that already ranks well in traditional search, with around three-quarters of AI Overview citations coming from the top 10 organic results. Platforms prioritize established, authoritative domains and quality signals over "optimized for AI" templates.

The detection pattern: Large sets of nearly identical pages distinguished only by keywords create template homogeneity that both algorithms and human readers detect. Starting from "what keywords can we target?" instead of "what problems can our data solve?" produces an intent mismatch that no amount of ranking optimization can fix.
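One way to check your own page set for this kind of template homogeneity is word-shingle Jaccard similarity, a standard near-duplicate detection technique. The sketch below is a minimal illustration, not Google's detection method: it assumes you have each page's body text and its target keyword, masks the keyword so pages that differ only by the swapped term compare as identical, and flags pairs above a threshold. The function names, the 3-word shingle size, and the 0.8 threshold are hypothetical choices.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping k-word windows ("shingles") in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def mask_keyword(text: str, keyword: str) -> str:
    """Replace the page's target keyword with a placeholder so that pages
    differing only in the swapped keyword produce identical shingles."""
    return re.sub(re.escape(keyword), "KEYWORD", text, flags=re.IGNORECASE)


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union (1.0 if both empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def flag_homogeneous_pages(pages: dict[str, tuple[str, str]],
                           threshold: float = 0.8) -> list[tuple[str, str, float]]:
    """pages maps URL -> (target keyword, body text).
    Returns URL pairs whose keyword-masked shingle sets overlap at or above threshold."""
    masked = {url: shingles(mask_keyword(body, kw)) for url, (kw, body) in pages.items()}
    flagged = []
    for u1, u2 in combinations(sorted(masked), 2):
        score = jaccard(masked[u1], masked[u2])
        if score >= threshold:
            flagged.append((u1, u2, round(score, 2)))
    return flagged


# Hypothetical pages: two keyword-swapped templates and one with different content.
pages = {
    "/crm-for-dentists": ("dentists", "The best CRM for dentists. Compare pricing and features for dentists today."),
    "/crm-for-lawyers": ("lawyers", "The best CRM for lawyers. Compare pricing and features for lawyers today."),
    "/crm-for-realtors": ("realtors", "Realtors share which features matter when managing listings and open houses."),
}
flagged = flag_homogeneous_pages(pages)
print(flagged)  # [('/crm-for-dentists', '/crm-for-lawyers', 1.0)]
```

Comparing every pair is quadratic, which is fine for an audit of a few thousand pages; for larger sets, MinHash or locality-sensitive hashing approximates the same Jaccard score more cheaply.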

Result: sites briefly get some AI citations, then face deindexing or heavy demotion because they fail Google's people-first standard.

Given these failure patterns, success requires a fundamentally different approach.

The answer comes down to whether you have unique data assets that competitors can't replicate.

Ask yourself: Do we have proprietary data that competitors can't easily replicate? Can our data provide insights users can't get elsewhere? Is our data live or regularly updating, rather than static snapshots?

Real estate: MLS feeds, neighborhood statistics, historical pricing trends, live inventory data

SaaS integrations: App compatibility matrices, integration requirements, real usage patterns, tested workflows

Marketplaces: Live inventory, pricing comparisons, availability by region or segment, demand signals

Financial services: Real-time rate comparisons, proprietary qualification calculators using internal rules, personalized product matching

Healthcare: Provider directories with quality metrics, treatment outcomes data, facility-level performance statistics

What doesn't qualify: Public information repackaged from Wikipedia or vendor sites, keyword research-driven content with no underlying dataset, aggregated reviews from other platforms without added analysis, or template pages where only the location or keyword is swapped.

The dividing line is clear: successful implementations start with unique data; failed ones start with keyword lists.

Companies succeeding with programmatic SEO share three attributes: proprietary data competitors can't replicate, human oversight on every page, and user intent-first positioning.

Zillow (real estate marketplace platform): Starts with exclusive MLS and real estate data to power property and neighborhood pages with live pricing, inventory, and trend information. Human-designed navigation and comparison tools help buyers answer real questions like "Is this area right for me?" rather than just displaying raw data. Shows how starting with exclusive data and maintaining human quality control throughout the system drives success.

Zapier (workflow automation platform): Maintains proprietary app compatibility matrices and tested workflows that competitors can't easily replicate. Programmatic pages deliver unique value by answering specific jobs-to-be-done such as "how to connect tool X and Y to automate Z," not just "does it integrate?" Demonstrates how template-driven automation using structured database fields creates scalable, unique pages without relying on AI generation.

NerdWallet (financial comparison platform): Built on proprietary calculators and real-time rate data that personalize financial product comparisons for individual users, rather than simply listing generic interest rates. Human-authored guidance explains which products fit specific financial situations. Exemplifies how human-written introductions prevent the template feel even at scale.

Canva (graphic design platform): Relies on a proprietary template library and usage analytics to build pages around concrete design needs (pitch decks, resumes, social posts). Human categorization and design guidance ensure each template page solves a specific creative problem, not just targets a keyword. Shows how database-driven programmatic pages can scale to thousands of templates using structured metadata and template categorization.

The common thread: exclusive data assets combined with human oversight at every stage, whether from an in-house team or an experienced SEO agency, rather than templates filled by AI with light human editing.

These success models share a common implementation approach. Here's the blueprint they follow:

1. Data-First Strategy: Start only if you have the exclusive datasets described above. If everything is available elsewhere, you don't have a real programmatic opportunity yet.

2. Human-Authored Hooks: Humans should write concise intros that explain why the data on each page matters and how to use it, preventing the "template feel."

3. Unique Value Per Page: Every page must solve a specific user problem. If no one would pay for the insight or tool on that page, it isn't differentiated enough.

4. AI Assistance for Acceleration: Use AI to standardize formatting, connect datasets, and update pages at scale. The data is the content; AI is the distribution and polish layer.

5. Human Quality Control: Final human review ensures the page passes the "unique data that Google wouldn't count as thin content" test and offers more value than a few minutes of basic Google searches.
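The five steps can be sketched as a small page-building pipeline with a gate for each step. This is a minimal illustration under stated assumptions, not the implementation used by any company named here: each page comes from a hypothetical `PageRecord` drawn from your own dataset, `MIN_PROPRIETARY_FIELDS` is an arbitrary cutoff standing in for the data-first check, the human-written intro and reviewer sign-off are plain fields an editor fills in, and the AI/automation layer is stubbed as a deterministic formatter that adds no new claims.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical threshold for step 1 (data-first): a page needs at least this
# many proprietary data points before it is worth generating at all.
MIN_PROPRIETARY_FIELDS = 3


@dataclass
class PageRecord:
    slug: str
    user_question: str                   # the specific problem the page solves (step 3)
    proprietary_data: dict[str, str]     # fields only your dataset has (step 1)
    human_intro: Optional[str] = None    # written by an editor, never generated (step 2)
    reviewed_by: Optional[str] = None    # set by the final human reviewer (step 5)


@dataclass
class BuildResult:
    published: dict[str, str] = field(default_factory=dict)  # slug -> rendered page
    held: dict[str, str] = field(default_factory=dict)       # slug -> reason held back


def format_data(data: dict[str, str]) -> str:
    """Stand-in for the AI/automation layer (step 4): it only formats the
    record's own data consistently and adds nothing new."""
    return "\n".join(f"- {key}: {value}" for key, value in sorted(data.items()))


def render(record: PageRecord) -> str:
    return f"# {record.user_question}\n\n{record.human_intro}\n\n{format_data(record.proprietary_data)}\n"


def build_pages(records: list[PageRecord]) -> BuildResult:
    """Apply the gates in order; only records that pass all of them are rendered."""
    result = BuildResult()
    for record in records:
        if len(record.proprietary_data) < MIN_PROPRIETARY_FIELDS:
            result.held[record.slug] = "not enough proprietary data"
        elif not record.user_question.strip():
            result.held[record.slug] = "no specific user problem"
        elif not record.human_intro:
            result.held[record.slug] = "missing human-written intro"
        elif not record.reviewed_by:
            result.held[record.slug] = "awaiting human review"
        else:
            result.published[record.slug] = render(record)
    return result


# Illustrative records; every value is a placeholder, not real data.
records = [
    PageRecord(
        slug="crm-deal-to-invoice",
        user_question="How do I create an invoice automatically when a CRM deal closes?",
        proprietary_data={"trigger": "deal marked won", "action": "create draft invoice",
                          "tested_on": "internal test account"},
        human_intro="Editor-written intro explaining when this workflow helps and what to check first.",
        reviewed_by="editor@example.com",
    ),
    PageRecord(
        slug="crm-for-dentists",
        user_question="Best CRM for dentists",
        proprietary_data={"category": "CRM"},
    ),
    PageRecord(
        slug="form-to-spreadsheet",
        user_question="How do I log every form submission in a spreadsheet?",
        proprietary_data={"trigger": "new form entry", "action": "append row",
                          "tested_on": "internal test account"},
    ),
]

result = build_pages(records)
print(list(result.published))  # ['crm-deal-to-invoice']
print(result.held)
# {'crm-for-dentists': 'not enough proprietary data', 'form-to-spreadsheet': 'missing human-written intro'}
```

The cheapest check (is there enough proprietary data?) runs first, so keyword-only records like `crm-for-dentists` never reach an editor. Held pages keep a reason rather than being published as thin variants, which gives the team a work queue instead of a pile of near-duplicates.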

RankScience LLC

2443 Fillmore St #380-1937,

San Francisco, CA 94115

© 2026 RankScience, All Rights Reserved